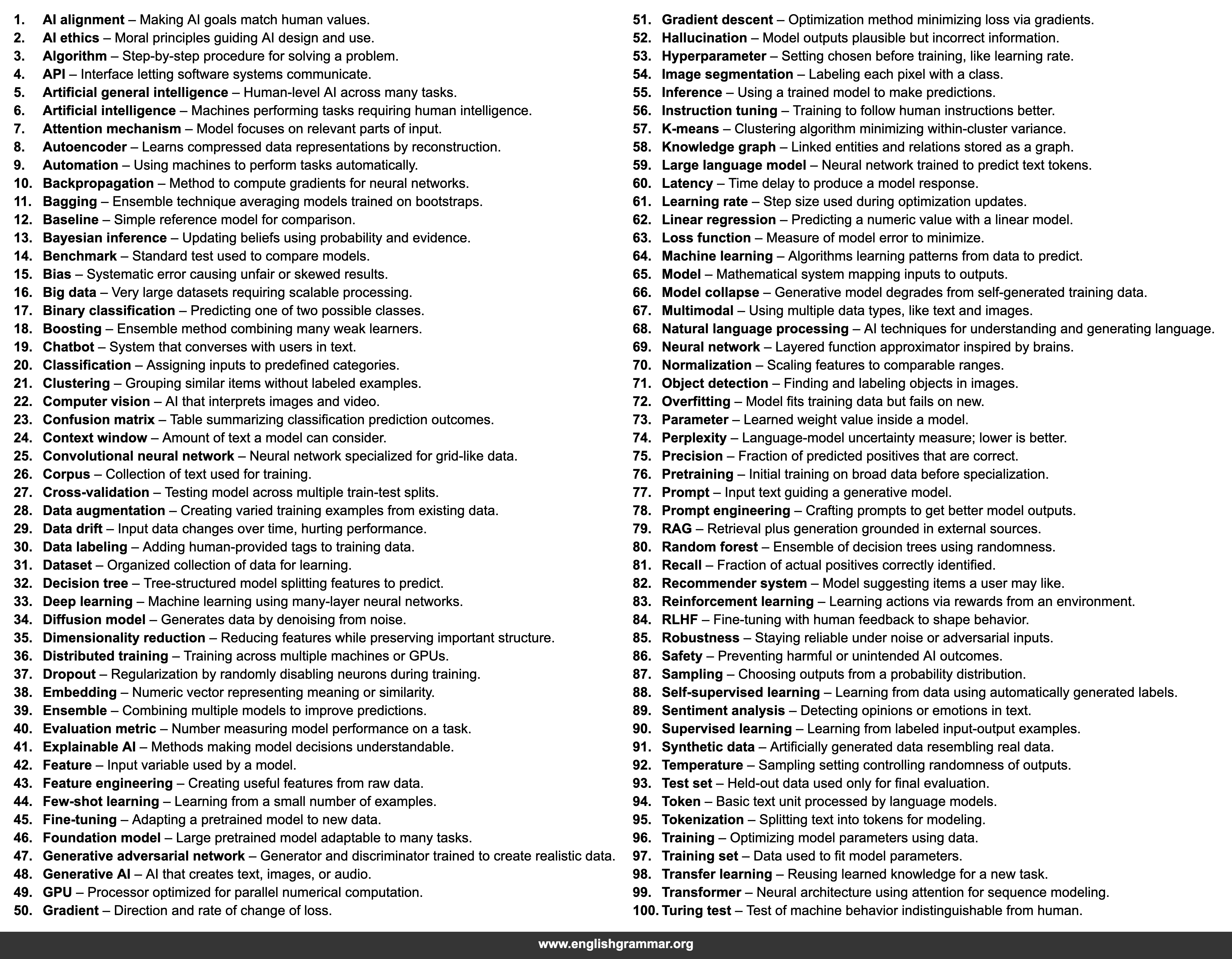

| No. | Term | Definition |

|---|---|---|

| 1. | AI alignment | Making AI goals match human values. |

| 2. | AI ethics | Moral principles guiding AI design and use. |

| 3. | Algorithm | Step-by-step procedure for solving a problem. |

| 4. | API | Interface letting software systems communicate. |

| 5. | Artificial general intelligence | Human-level AI across many tasks. |

| 6. | Artificial intelligence | Machines performing tasks requiring human intelligence. |

| 7. | Attention mechanism | Model focuses on relevant parts of input. |

| 8. | Autoencoder | Learns compressed data representations by reconstruction. |

| 9. | Automation | Using machines to perform tasks automatically. |

| 10. | Backpropagation | Method to compute gradients for neural networks. |

| 11. | Bagging | Ensemble technique averaging models trained on bootstraps. |

| 12. | Baseline | Simple reference model for comparison. |

| 13. | Bayesian inference | Updating beliefs using probability and evidence. |

| 14. | Benchmark | Standard test used to compare models. |

| 15. | Bias | Systematic error causing unfair or skewed results. |

| 16. | Big data | Very large datasets requiring scalable processing. |

| 17. | Binary classification | Predicting one of two possible classes. |

| 18. | Boosting | Ensemble method combining many weak learners. |

| 19. | Chatbot | System that converses with users in text. |

| 20. | Classification | Assigning inputs to predefined categories. |

| 21. | Clustering | Grouping similar items without labeled examples. |

| 22. | Computer vision | AI that interprets images and video. |

| 23. | Confusion matrix | Table summarizing classification prediction outcomes. |

| 24. | Context window | Amount of text a model can consider. |

| 25. | Convolutional neural network | Neural network specialized for grid-like data. |

| 26. | Corpus | Collection of text used for training. |

| 27. | Cross-validation | Testing model across multiple train-test splits. |

| 28. | Data augmentation | Creating varied training examples from existing data. |

| 29. | Data drift | Input data changes over time, hurting performance. |

| 30. | Data labeling | Adding human-provided tags to training data. |

| 31. | Dataset | Organized collection of data for learning. |

| 32. | Decision tree | Tree-structured model splitting features to predict. |

| 33. | Deep learning | Machine learning using many-layer neural networks. |

| 34. | Diffusion model | Generates data by denoising from noise. |

| 35. | Dimensionality reduction | Reducing features while preserving important structure. |

| 36. | Distributed training | Training across multiple machines or GPUs. |

| 37. | Dropout | Regularization by randomly disabling neurons during training. |

| 38. | Embedding | Numeric vector representing meaning or similarity. |

| 39. | Ensemble | Combining multiple models to improve predictions. |

| 40. | Evaluation metric | Number measuring model performance on a task. |

| 41. | Explainable AI | Methods making model decisions understandable. |

| 42. | Feature | Input variable used by a model. |

| 43. | Feature engineering | Creating useful features from raw data. |

| 44. | Few-shot learning | Learning from a small number of examples. |

| 45. | Fine-tuning | Adapting a pretrained model to new data. |

| 46. | Foundation model | Large pretrained model adaptable to many tasks. |

| 47. | Generative adversarial network | Generator and discriminator trained to create realistic data. |

| 48. | Generative AI | AI that creates text, images, or audio. |

| 49. | GPU | Processor optimized for parallel numerical computation. |

| 50. | Gradient | Direction and rate of change of loss. |

| 51. | Gradient descent | Optimization method minimizing loss via gradients. |

| 52. | Hallucination | Model outputs plausible but incorrect information. |

| 53. | Hyperparameter | Setting chosen before training, like learning rate. |

| 54. | Image segmentation | Labeling each pixel with a class. |

| 55. | Inference | Using a trained model to make predictions. |

| 56. | Instruction tuning | Training to follow human instructions better. |

| 57. | K-means | Clustering algorithm minimizing within-cluster variance. |

| 58. | Knowledge graph | Linked entities and relations stored as a graph. |

| 59. | Large language model | Neural network trained to predict text tokens. |

| 60. | Latency | Time delay to produce a model response. |

| 61. | Learning rate | Step size used during optimization updates. |

| 62. | Linear regression | Predicting a numeric value with a linear model. |

| 63. | Loss function | Measure of model error to minimize. |

| 64. | Machine learning | Algorithms learning patterns from data to predict. |

| 65. | Model | Mathematical system mapping inputs to outputs. |

| 66. | Model collapse | Generative model degrades from self-generated training data. |

| 67. | Multimodal | Using multiple data types, like text and images. |

| 68. | Natural language processing | AI techniques for understanding and generating language. |

| 69. | Neural network | Layered function approximator inspired by brains. |

| 70. | Normalization | Scaling features to comparable ranges. |

| 71. | Object detection | Finding and labeling objects in images. |

| 72. | Overfitting | Model fits training data but fails on new. |

| 73. | Parameter | Learned weight value inside a model. |

| 74. | Perplexity | Language-model uncertainty measure; lower is better. |

| 75. | Precision | Fraction of predicted positives that are correct. |

| 76. | Pretraining | Initial training on broad data before specialization. |

| 77. | Prompt | Input text guiding a generative model. |

| 78. | Prompt engineering | Crafting prompts to get better model outputs. |

| 79. | RAG | Retrieval plus generation grounded in external sources. |

| 80. | Random forest | Ensemble of decision trees using randomness. |

| 81. | Recall | Fraction of actual positives correctly identified. |

| 82. | Recommender system | Model suggesting items a user may like. |

| 83. | Reinforcement learning | Learning actions via rewards from an environment. |

| 84. | RLHF | Fine-tuning with human feedback to shape behavior. |

| 85. | Robustness | Staying reliable under noise or adversarial inputs. |

| 86. | Safety | Preventing harmful or unintended AI outcomes. |

| 87. | Sampling | Choosing outputs from a probability distribution. |

| 88. | Self-supervised learning | Learning from data using automatically generated labels. |

| 89. | Sentiment analysis | Detecting opinions or emotions in text. |

| 90. | Supervised learning | Learning from labeled input-output examples. |

| 91. | Synthetic data | Artificially generated data resembling real data. |

| 92. | Temperature | Sampling setting controlling randomness of outputs. |

| 93. | Test set | Held-out data used only for final evaluation. |

| 94. | Token | Basic text unit processed by language models. |

| 95. | Tokenization | Splitting text into tokens for modeling. |

| 96. | Training | Optimizing model parameters using data. |

| 97. | Training set | Data used to fit model parameters. |

| 98. | Transfer learning | Reusing learned knowledge for a new task. |

| 99. | Transformer | Neural architecture using attention for sequence modeling. |

| 100. | Turing test | Test of machine behavior indistinguishable from human. |